Your website has 10,000 pages. Maybe 50,000. Hell, I've worked with university systems that have hundreds of thousands of URLs floating around in their index. And yet? You're getting buried by smaller competitors with a fraction of your content.

Here's the thing nobody wants to admit: large websites don't automatically win at SEO just because they're large. In fact, most of the time, scale works against you if you don't have the technical infrastructure to support it.

So let's talk about the 10 reasons your massive government agency, higher education institution, or enterprise website is underperforming and, more importantly, what you can actually do about it.

1. Your Crawl Budget Is Being Wasted on Garbage Pages

Google doesn't have infinite time to crawl your site. Shocking, I know. When you've got thousands (or tens of thousands) of pages, search engines allocate a "crawl budget": roughly, how many URLs they're willing and able to fetch from your site in a given period.

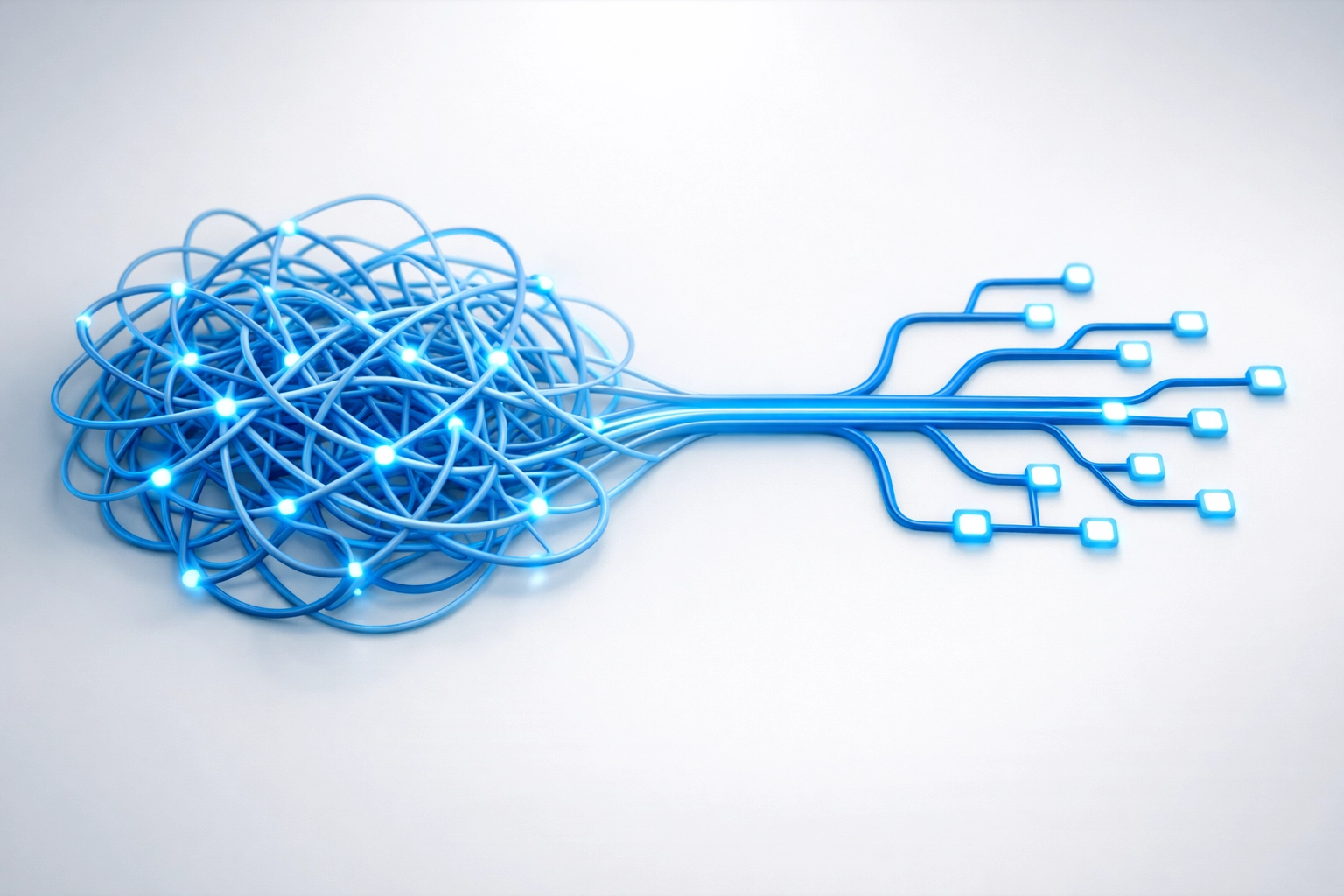

And if your site architecture is a tangled mess? Google's bots are spending that precious budget on pagination pages, session IDs, and duplicate content instead of your important stuff.

The fix: Audit your server logs to see what Google is actually crawling. You'll be horrified. Then use robots.txt and internal linking to guide crawlers toward your high-value pages. Think of it like Marie Kondo-style decluttering, except for URLs.
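If you want a feel for what that log audit looks like, here's a minimal sketch. It assumes your server writes the common "combined" access log format, and the sample lines are made up for illustration. It also trusts the user-agent string, which can be spoofed, so a real audit should verify Googlebot with a reverse DNS lookup.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Pulls the requested URL and the user agent out of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<url>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits_by_section(lines):
    """Count Googlebot requests per top-level path segment, flagging query-string URLs."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # not a parseable line, or not (claiming to be) Googlebot
        parts = urlsplit(match.group("url"))
        segments = [s for s in parts.path.split("/") if s]
        section = "/" + segments[0] if segments else "/"
        if parts.query:
            section += " (with query string)"
        counts[section] += 1
    return counts

# Hypothetical log lines, for illustration only.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /events?date=2024-01-01 HTTP/1.1" 200 512 "-" "' + GOOGLEBOT + '"',
    '66.249.66.1 - - [10/Oct/2024:13:55:37 +0000] "GET /events?date=2024-01-02 HTTP/1.1" 200 512 "-" "' + GOOGLEBOT + '"',
    '66.249.66.1 - - [10/Oct/2024:13:55:38 +0000] "GET /admissions/apply HTTP/1.1" 200 2048 "-" "' + GOOGLEBOT + '"',
    '203.0.113.9 - - [10/Oct/2024:13:55:39 +0000] "GET /admissions HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for section, hits in googlebot_hits_by_section(SAMPLE_LOG).most_common():
    print(f"{hits:>5}  {section}")
```

If the sections with query strings are eating most of the hits, that's your wasted crawl budget staring back at you.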

2. Your Site Architecture Was Designed by Committee (And It Shows)

Ever notice how government and higher ed websites feel like they were built by 47 different departments who never talked to each other? That's because they were.

Your homepage links to 15 different "portals." Each portal has its own navigation structure. Important content is buried seven clicks deep. And Google has no idea what's actually important on your site.

The fix: Implement a clean hierarchy where your most critical pages are within three clicks of your homepage. Use descriptive, logical categories. And for the love of all that is holy, get your departments to agree on a consistent navigation structure. (Good luck with that last one.)
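To check the three-click rule at scale, you can run a breadth-first search over your internal link graph. This is a rough sketch: the `links` dictionary is a hypothetical stand-in for whatever your crawler exports, and the URLs are invented.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search from the homepage: minimum clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, home, max_clicks=3):
    """Pages that take more than max_clicks to reach from the homepage."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)

# Hypothetical link graph: page -> pages it links to.
SAMPLE_LINKS = {
    "/": ["/portal", "/admissions"],
    "/portal": ["/portal/dept"],
    "/portal/dept": ["/portal/dept/programs"],
    "/portal/dept/programs": ["/portal/dept/programs/apply"],
}

print(too_deep(SAMPLE_LINKS, "/"))
```

Pages that never show up in `click_depths` at all are unreachable from the homepage, which is a separate problem worth a look.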

3. You're Drowning in Duplicate Content

Let me guess: your content management system automatically creates multiple URLs for the same content? Maybe you've got www and non-www versions floating around? Or HTTP and HTTPS? Perhaps your events calendar creates a new URL for every date range someone searches?

Duplicate content doesn't just confuse Google. It dilutes your ranking signals across multiple URLs when you need them concentrated on one authoritative version.

The fix: Canonical tags are your friend. So are 301 redirects. Pick one authoritative URL for each piece of content and make everything else point to it. And talk to your IT department about those dynamic URL parameters.
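Here's one way to sketch the "pick one authoritative URL" rule in code. Everything site-specific is an assumption: the preferred host `www.example.edu`, the list of ignorable parameters, and the idea that every input URL belongs to the same site. Your own list will differ.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameters that don't change the page content on this imaginary site.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign", "start", "end"}

def canonical_url(url, host="www.example.edu"):
    """Collapse protocol, host, and noise-parameter variants into one canonical URL.

    Assumes every URL passed in belongs to the same site.
    """
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # stable parameter order, so ?a=1&b=2 and ?b=2&a=1 match
    return urlunsplit(("https", host, parts.path or "/", urlencode(kept), ""))

variants = [
    "http://example.edu/events?start=2024-01-01&end=2024-01-31",
    "https://www.example.edu/events?utm_source=newsletter",
    "https://example.edu/events",
]
print({canonical_url(v) for v in variants})
```

The output of a function like this is what you'd put in the canonical tag, and what the non-canonical variants should 301 to.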

4. Your Page Speed Would Make a Dial-Up Modem Blush

Core Web Vitals aren't just a suggestion anymore. They're a ranking factor. And your 15-year-old website with unoptimized images, render-blocking JavaScript, and a server that takes 4 seconds to respond? It's dragging your rankings down.

I've seen university homepages that take 8+ seconds to load on mobile. That's not just bad for SEO. It's actively driving away prospective students (or citizens, or grant applicants, or whoever you're trying to reach).

The fix: Compress your files. Implement lazy loading for images. Move to a CDN. Enable browser caching. And seriously consider whether you need that 5MB hero image of your building's exterior. (You don't.)

5. JavaScript Is Hiding Your Content from Search Engines

Modern web development loves JavaScript frameworks. And they're great! Except when they prevent search engines from seeing your content because everything loads client-side and Google's crawler gives up before the page finishes rendering.

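The fix: Implement server-side rendering or pre-rendering for your JavaScript-heavy pages. (Dynamic rendering also works, though Google now describes it as a workaround rather than a long-term solution.) Or, wild idea: maybe that "About the Dean" page doesn't need to be a single-page React app?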

6. Your Internal Linking Strategy Is Nonexistent

Internal links are how you tell Google which pages matter. They're how you distribute authority across your site. And on most large websites? They're completely random and inconsistent.

Almost every project I've worked on with government or higher ed clients reveals the same pattern: their most important pages have fewer internal links than random blog posts from 2014.

The fix: Design an automated internal linking system that scales. Create topic clusters where pillar pages link to related subtopic pages. Add contextual links in your content. And for large sites, consider implementing recommendation engines that suggest related content.
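Before you build anything automated, it helps to measure the problem. The sketch below counts inbound internal links per page and flags priority pages that fall under a threshold. The link graph, the page list, and the threshold of three are all hypothetical.

```python
from collections import Counter

def inbound_counts(links):
    """Count how many distinct pages link to each target (self-links ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):  # a page linking twice still counts once
            if target != source:
                counts[target] += 1
    return counts

def underlinked(links, priority_pages, minimum=3):
    """Priority pages with fewer than `minimum` inbound internal links."""
    counts = inbound_counts(links)
    return {page: counts[page] for page in priority_pages if counts[page] < minimum}

# Hypothetical link graph: page -> pages it links to.
SAMPLE_LINKS = {
    "/": ["/news/2014-bake-sale", "/admissions"],
    "/news": ["/news/2014-bake-sale"],
    "/news/archive": ["/news/2014-bake-sale"],
    "/about": ["/news/2014-bake-sale"],
}

print(underlinked(SAMPLE_LINKS, ["/admissions", "/financial-aid"]))
```

A report like this gives you a concrete list of pages that need contextual links first.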

7. Your Mobile Experience Is an Afterthought

Google moved to mobile-first indexing years ago. But your desktop-optimized site with tiny click targets, horizontal scrolling issues, and forms that are impossible to fill out on a phone? Google is judging you based on that mobile disaster, not your beautiful desktop version.

The fix: Responsive design isn't optional anymore. Test your site on actual mobile devices (not just Chrome's device emulator). Make sure form fields are big enough to tap. And please, please make your navigation work on mobile.

8. You Have No Idea What's Actually Indexed

Quick question: how many pages from your site are in Google's index right now? If you said "I don't know" or "all of them," you're probably wrong.

Large sites often have massive indexing issues. Pages that should be indexed aren't. Pages that shouldn't be indexed are. Your XML sitemap hasn't been updated in three years. And nobody's monitoring any of it.

The fix: Set up Google Search Console properly (I know, it seems basic, but you'd be surprised). Submit clean, updated XML sitemaps. Monitor your index coverage reports. Remove low-quality pages from the index using noindex tags.
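A "clean" sitemap mostly means it's generated from your actual page inventory instead of maintained by hand. Here's a minimal sketch using Python's standard library. The `(url, lastmod, indexable)` tuples are an assumed export format from your CMS, not something any particular system produces.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod, indexable) tuples, skipping noindexed pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod, indexable in pages:
        if not indexable:
            continue  # pages you noindex should not be advertised in the sitemap
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical page inventory.
SAMPLE_PAGES = [
    ("https://www.example.edu/admissions", "2024-05-01", True),
    ("https://www.example.edu/financial-aid", "2024-04-12", True),
    ("https://www.example.edu/search?q=test", "2024-05-02", False),
]

print(build_sitemap(SAMPLE_PAGES))
```

The sitemap protocol caps each file at 50,000 URLs, so a large site will need to split the output into several files and list them in a sitemap index.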

9. Your International/Multi-Regional Setup Is Broken

Running a university with international campuses? A government agency serving multiple regions? Congratulations, you probably have hreflang tags that are completely misconfigured or missing entirely.

Hreflang errors lead Google to show the wrong language or regional version to the wrong audience, which dilutes your visibility across all regions.

The fix: Implement hreflang tags correctly (it's more complicated than it should be). Test them thoroughly. And if you're serving similar content to different regions, make sure each version has unique, valuable content rather than just slightly tweaked duplicates.
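One of the most common hreflang mistakes is a missing return link: page A lists page B as an alternate, but B doesn't list A back, and Google ignores annotations that aren't reciprocal. Here's a small sketch that checks for it. The input shape (page URL mapped to its declared alternates) and the URLs are assumptions for illustration.

```python
def missing_return_links(hreflang):
    """Find (page, alternate) pairs where the alternate does not link back to the page.

    `hreflang` maps each page URL to a dict of {language_code: alternate_url}.
    """
    problems = []
    for page, alternates in hreflang.items():
        for alternate in alternates.values():
            if alternate == page:
                continue  # self-reference is expected, nothing to check
            if page not in hreflang.get(alternate, {}).values():
                problems.append((page, alternate))
    return sorted(problems)

# Hypothetical annotations: the Spanish page forgets to point back at the English one.
SAMPLE_HREFLANG = {
    "https://example.edu/en/admissions": {
        "en": "https://example.edu/en/admissions",
        "es": "https://example.edu/es/admisiones",
    },
    "https://example.edu/es/admisiones": {
        "es": "https://example.edu/es/admisiones",
    },
}

for page, alternate in missing_return_links(SAMPLE_HREFLANG):
    print(f"{alternate} does not link back to {page}")
```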

10. Technical Debt Is Crushing You (But Nobody Wants to Talk About It)

Here's the uncomfortable truth: your website is probably running on legacy systems, with patches upon patches, built by contractors who left years ago, and nobody currently on staff fully understands how it all works together.

That technical debt manifests as broken structured data, redirect chains five links deep, orphaned pages, inconsistent URL structures, and a robots.txt file that's blocking half your site by accident.

A large state university I met with recently discovered that its main site had over 15,000 broken internal links. Not external links. Internal ones. On their own site.

The fix: This one requires an investment. You need a comprehensive technical SEO audit that maps out all the issues, prioritizes them by business impact, and creates a realistic remediation plan. You need someone (internal or external) who can coordinate with your development team, test changes before rolling them out, and monitor the results.
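As one small, concrete piece of that audit, here's a sketch of how redirect chains can be detected and flattened. It assumes you've already exported your redirects as a simple source-to-target map. The paths are invented, and a real site would pull this from server config or a crawl.

```python
def redirect_chain(url, redirects, max_hops=10):
    """Follow a {source: target} redirect map and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        chain.append(next_url)
        if next_url in chain[:-1]:
            break  # redirect loop: stop instead of spinning forever
    return chain

def flatten(redirects):
    """Rewrite every redirect to point straight at its final destination.

    Note: loops are not resolved here; inspect them with redirect_chain first.
    """
    return {source: redirect_chain(source, redirects)[-1] for source in redirects}

# Hypothetical redirect map with a three-hop chain.
SAMPLE_REDIRECTS = {
    "/old-admissions": "/admissions-2019",
    "/admissions-2019": "/admissions-2022",
    "/admissions-2022": "/admissions",
}

print(redirect_chain("/old-admissions", SAMPLE_REDIRECTS))
print(flatten(SAMPLE_REDIRECTS))
```

After flattening, every old URL reaches its destination in one hop, which is kinder to both crawlers and visitors.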

The Real Cost of Ignoring Technical SEO

Look, I get it. Technical SEO isn't sexy. It's hard to get budget approved for "fixing hreflang tags" when your VP wants to talk about AI chatbots and viral TikToks.

But here's what I tell every client with a large website: your content strategy means nothing if Google can't crawl, understand, and rank your pages. You can have the best thought leadership, the most comprehensive resources, and the most qualified leads in your space, and it won't matter if your technical foundation is broken.

Government agencies and higher ed institutions especially can't afford to ignore this. You're competing for visibility with better-funded private sector companies who have dedicated technical SEO teams. Your mission might be more important, but Google doesn't care about mission statements.

Where to Start

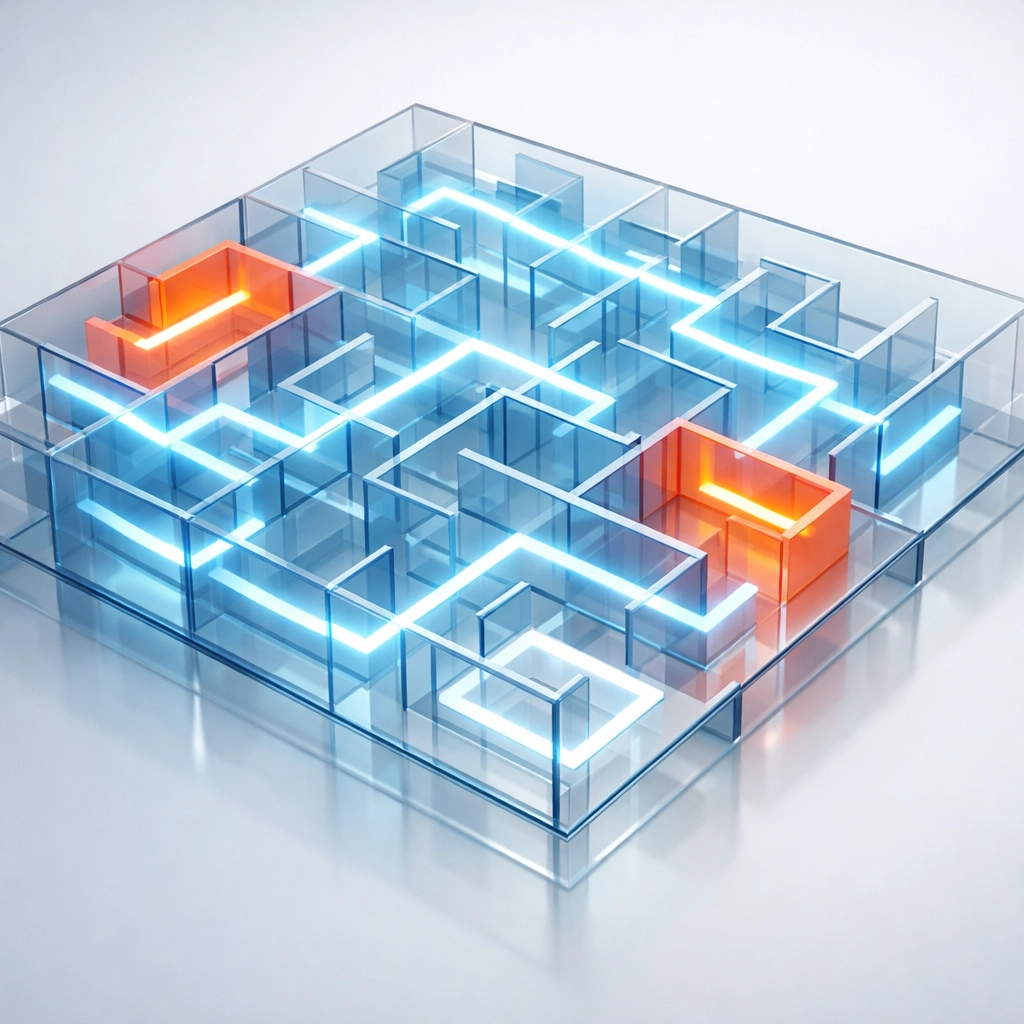

If you're reading this and feeling overwhelmed (and who isn't?), here's my advice: don't try to fix everything at once.

Start with a proper technical audit. Identify which issues are costing you the most visibility. Prioritize fixes based on potential impact versus implementation difficulty. Get your IT and marketing teams in the same room (or Zoom call). And bring in technical SEO expertise if you don't have it in-house, whether that's hiring, training, or working with a consultant who understands the unique challenges of large, complex websites.
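If "impact versus difficulty" feels fuzzy, a crude score is better than a gut feeling. The sketch below ranks issues by impact per unit of effort. The 1-to-5 scales and the sample scores are placeholders I made up, so treat the ratio as a conversation starter, not a formula.

```python
def prioritize(issues):
    """Sort (name, impact, effort) tuples so the best impact-per-effort fixes come first.

    Impact and effort are rough 1-5 scores agreed on by the team.
    """
    return sorted(issues, key=lambda issue: issue[1] / issue[2], reverse=True)

# Hypothetical audit findings with made-up scores.
SAMPLE_ISSUES = [
    ("Server-side render JavaScript pages", 4, 5),
    ("Fix robots.txt blocking key sections", 5, 1),
    ("Flatten redirect chains", 3, 2),
]

for name, impact, effort in prioritize(SAMPLE_ISSUES):
    print(f"{impact / effort:4.1f}  {name}")
```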

The good news? Once you fix these foundational issues, the improvements tend to stick. Unlike content that needs constant refreshing or links that decay over time, technical SEO improvements create lasting competitive advantages.

Your massive website should be an asset, not a liability. But it takes surgical precision and ongoing monitoring to keep it that way.

Need help figuring out where your technical SEO stands? That's literally what we do. Reach out and let's talk about getting your crawl budget under control.